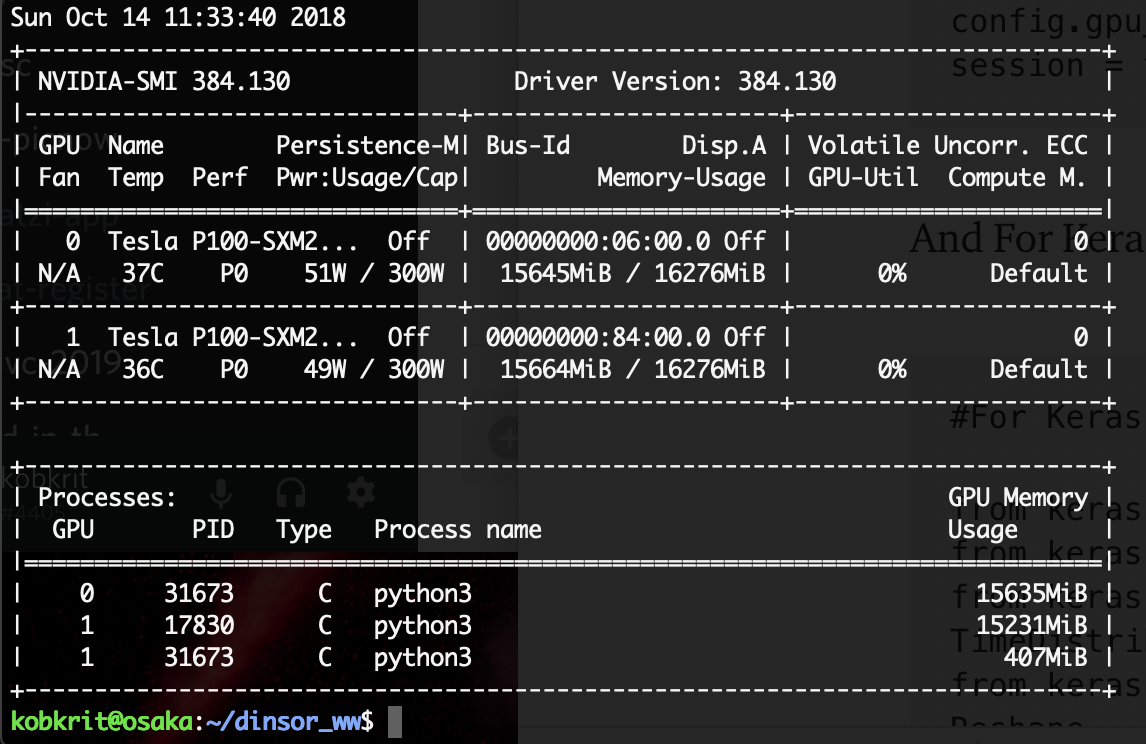

tensorflow - How is GPU still used while cuda out-of-memory error occurs? - Data Science Stack Exchange

pytorch - Why tensorflow GPU memory usage decreasing when I increasing the batch size? - Stack Overflow

![2.5GB of video memory missing in TensorFlow on both Linux and Windows [RTX 3080] - TensorRT - NVIDIA Developer Forums](https://aws1.discourse-cdn.com/nvidia/original/3X/9/c/9cd5718806eafaeef328276bf189bfd2f66ca8a9.png)

2.5GB of video memory missing in TensorFlow on both Linux and Windows [RTX 3080] - TensorRT - NVIDIA Developer Forums

Memory Hygiene With TensorFlow During Model Training and Deployment for Inference | by Tanveer Khan | IBM Data Science in Practice | Medium

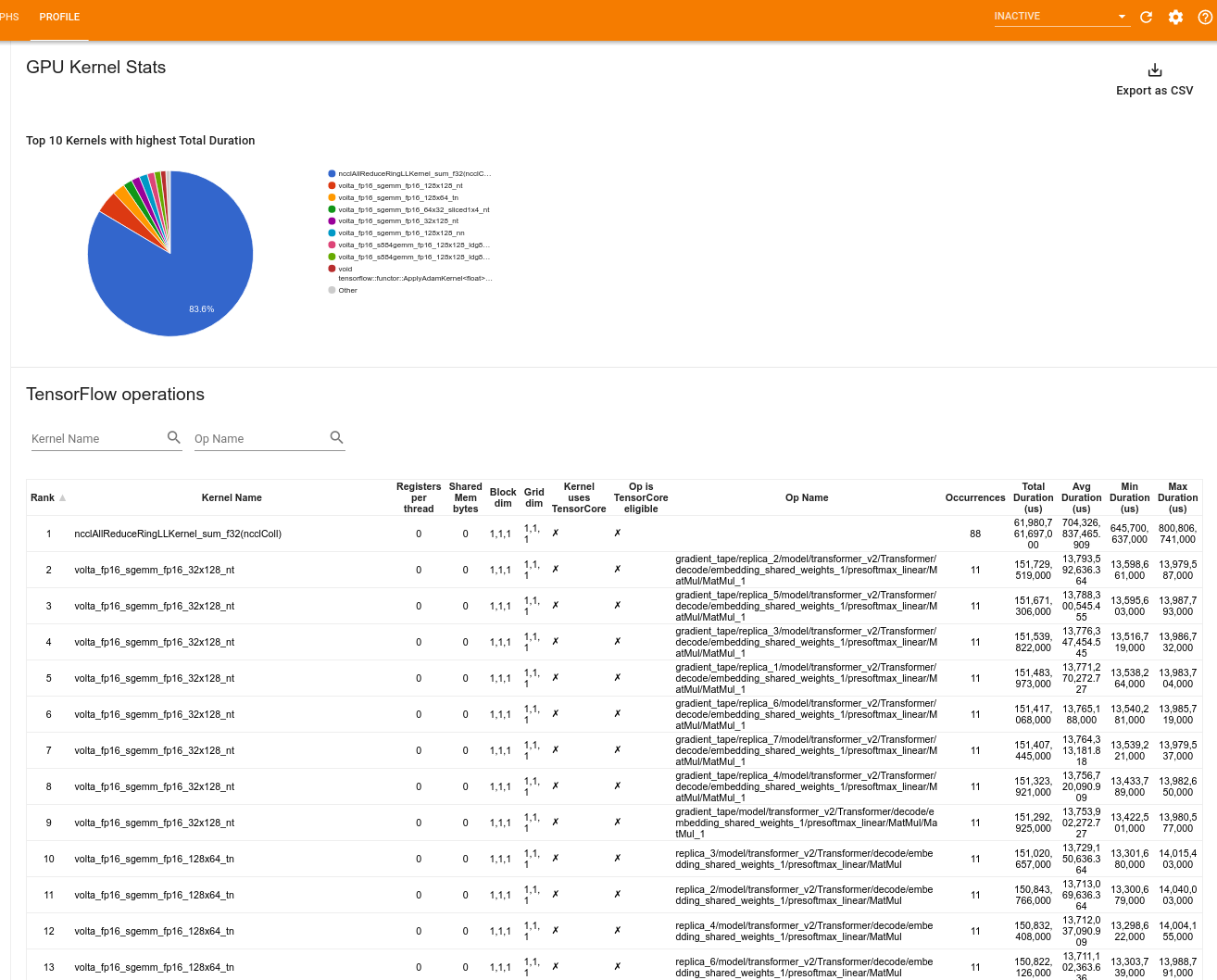

Optimizing I/O for GPU performance tuning of deep learning training in Amazon SageMaker | AWS Machine Learning Blog

I found that using tensorrt for inference takes more time than using tensorflow directly on GPU · Issue #24 · NVIDIA/tensorrt-laboratory · GitHub

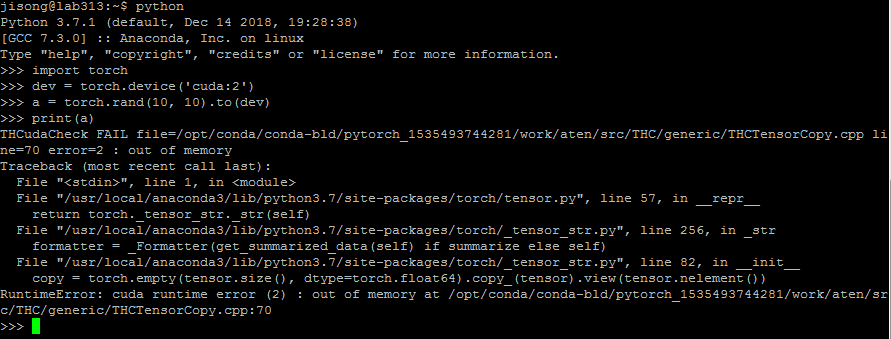

[rllib] GPU memory leak until out of memory when using local_mode with ray in pytorch PPO · Issue #7182 · ray-project/ray · GitHub

![[Solved] Tensorflow-gpu Error: self._traceback = tf_stack.extract_stack() | ProgrammerAH](https://programmerah.com/wp-content/uploads/2021/12/8024ba0d13124bc8ad0bb4a219d70d6c.png)

![[PDF] Training Deeper Models by GPU Memory Optimization on TensorFlow | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/497663d343870304b5ed1a2ebb997aaf09c4b529/4-Figure3-1.png)

![[Solved] RuntimeError: CUDA error: out of memory | ProgrammerAH](https://programmerah.com/wp-content/uploads/2021/09/20210926141749969.png)